Posts Tagged ‘Amazon’

The perils of running servers at Amazon EC2

Last week, some of our developers complained that every few minutes they intermittently lost access to the server we host in the Amazon Web Services (AWS) Elastic Compute Cloud (EC2) environment, although I was having no problems.

The next day, I could not reach the server either.

HTTP, HTTPS, SSH all failed.

CloudWatch showed all metrics as “flat” for the few minutes after I stopped getting a response.

I would like to note that we had been using this server for approximately 6 months without any problems whatsoever. We have a very standard procedure for accessing the server, and we tested many things before reporting the problem to Amazon. We were sure that we were not mistyping the SSH host name and that we were using the correct instance ID.

Additionally, I saw many posts in the Amazon EC2 discussion forum from other users who had suddenly lost access to their servers that same morning or the night before.

In the end, Amazon told us: “There does appear to be a problem with the underlying hardware.” and “I would relaunch the instance using your latest AMI. Since this instance is not EBS, what ever changes you made may be lost.”

Here are some hard lessons that I learned from the experience.

A) However redundant and high-availability oriented we thought Amazon was, IT IS NOT.

B) In incidents like this, Amazon’s support is really just a discussion forum for each of the services it offers. In this case, it is the EC2 discussion forum, where you are lumped in with thousands of other people running potentially hundreds of other AMIs that contain any number of “popular software” packages on several popular operating systems. In other words, there is a lot of traffic there from people running Windows, Oracle, WordPress, Linux (every flavor you can think of), etc. The traffic is so heavy that whether you get a response depends on the Amazon staff manning the forum, and on whether your needle gets lost in the high-traffic haystack.

C) Amazon does offer phone support, but only at the Gold and Platinum support levels. Gold costs $400/month, so unless you are an enterprise, you can forget it. Bronze and Silver cost $49 and $100/month, respectively.

Full details: http://aws.amazon.com/premiumsupport/

I was really surprised that there was nothing for the average Joe running a couple of servers. It costs me more per month to run at Amazon than at my dedicated server hosting company, yet at the hosting company I can at least get someone on the phone.

D) Amazon’s attitude, both in its response to us and in the responses I’ve seen many times since this issue, is that you should never rely on any single instance. Count on it going down at any time. Instead, have load balancers and custom-built, EBS-boot AMIs ready to go at all times.

This is a difficult philosophy to fully grasp, but one that must be grasped if you are moving to the cloud.

E) We actually had an EBS volume attached where our /var/www and MySQL data reside. We had previously tested disaster recovery for the case where the instance went away: we successfully detached the volume, spun up a new instance, re-attached the volume, and ran a script to tell the server where the /var/www and MySQL data were.

This is good practice for the data, but the server itself should be just as easy to replace.
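The recovery script mentioned in point E isn’t shown here, so below is a minimal sketch of what such a script might do. It assumes the data volume appears as /dev/sdf and is mounted on /vol (as in the df output further down this page), and that the data lives in hypothetical /vol/www and /vol/mysql directories that get bind-mounted over /var/www and /var/lib/mysql. Setting DRY_RUN=1 only prints the commands.

```bash
#!/usr/bin/env bash
# Sketch: re-attach an EBS data volume's directories after relaunching an instance.
# ASSUMPTIONS (hypothetical, adjust to your layout): device /dev/sdf, mount point /vol,
# data directories /vol/www and /vol/mysql.

DATA_DEV="${DATA_DEV:-/dev/sdf}"
DATA_MNT="${DATA_MNT:-/vol}"

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

attach_data_dirs() {
  run service mysql stop
  run mkdir -p "$DATA_MNT"
  run mount "$DATA_DEV" "$DATA_MNT"
  # Bind-mount the data directories over the paths Apache and MySQL expect.
  run mount --bind "$DATA_MNT/www" /var/www
  run mount --bind "$DATA_MNT/mysql" /var/lib/mysql
  run service mysql start
}
```

Previewing with DRY_RUN=1 before running it for real (as root) is a cheap way to catch a wrong device name.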

F) Instance store is not the way to go, unless you are literally spinning up a temporary test server for a few minutes or a few hours, or you only need an instance for its CPU cycles.

G) EBS-boot instances should really be the rule of thumb, with a master AMI generated from one. If you make changes (periodic operating system updates, etc.), also re-bundle your AMI periodically.
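With an EBS-boot instance, generating the master AMI is a single API call rather than a bundle-and-upload job. A minimal sketch, assuming the current AWS CLI (the aws command, not the older EC2 API tools) is installed and configured; the instance ID and name prefix are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: create a dated AMI from an EBS-backed instance with the AWS CLI.
# ASSUMPTION: aws CLI installed and configured; instance IDs passed in are your own.

# Build a name such as "master-2011-01-15".
ami_name() {
  echo "${1:-master}-$(date +%Y-%m-%d)"
}

create_master_ami() {
  local instance_id="$1" prefix="${2:-master}"
  local cmd=(aws ec2 create-image
    --instance-id "$instance_id"
    --name "$(ami_name "$prefix")"
    --description "Master AMI re-bundled after OS updates")
  # Without --no-reboot, AWS reboots the instance so the filesystem is consistent.
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

For example, DRY_RUN=1 create_master_ami i-0123456789abcdef0 prints the command, and dropping DRY_RUN runs it. Dating the name keeps each re-bundled AMI distinct, since AMI names must be unique within your account and region.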

H) Make sure your data is also stored on a separate EBS volume, and take automatic nightly snapshots of that volume. Think of EBS snapshots the way you think of incremental backups in traditional IT infrastructure.

By having your data on a separate EBS volume, you don’t necessarily have to re-bundle your AMI every night.

Someone out there on the web, correct me if I’m wrong, but it seems to me that snapshotting a data EBS volume every night is easier than re-bundling your instance every night, which is what you would have to do if your data were stored on the same EBS volume as the operating system and software.
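Nightly snapshots of the data volume can be scheduled from cron. A minimal sketch, again assuming the current AWS CLI; the volume ID and the wrapper script path in the crontab comment are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: nightly snapshot of the data EBS volume with the AWS CLI.
# ASSUMPTION: aws CLI installed and configured; volume IDs passed in are your own.

snapshot_description() {
  echo "nightly data snapshot $(date +%Y-%m-%d)"
}

snapshot_data_volume() {
  local volume_id="$1"
  local cmd=(aws ec2 create-snapshot --volume-id "$volume_id"
    --description "$(snapshot_description)")
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Example crontab entry (crontab -e) for a hypothetical wrapper script that calls
# snapshot_data_volume, running at 03:00 every night:
# 0 3 * * * /usr/local/bin/snapshot-data-volume.sh vol-0123456789abcdef0
```

Since MySQL writes to that volume, a snapshot taken while it is running, without flushing or locking tables first, is only crash-consistent; it is worth deciding whether that is acceptable for your data.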

Thoughts and feedback appreciated.

Problem creating an AMI from a running instance with ec2-bundle-vol

I’m writing this blog post in the hopes that some wise soul out there happens to read this post and responds with the answer. I am truly stumped.

I’m running an instance-store-backed instance at Amazon. I would like to bundle a custom AMI in case something happens to the instance, as it did a few days ago.

Our instance is built from a high-availability, high-performance AMI built by Pantheon. After we spin up the instance, we still have a few customizations to make, namely setting up the mail/mailboxes, setting the hostname, installing Webmin, etc.

We wanted to bundle our customizations into a new AMI in case something happened to the instance again and we could simply spin up a new instance from the AMI, change the public DNS and MX record, and re-attach the EBS volume where our /var/www and MySQL data is located.

After searching various and sundry locations on the web on how to re-bundle an AMI from a running instance, I came up with the following commands.

I’m using this command where 123456789123 is not my actual Amazon user ID, and I’ve copied my Amazon private key and certificate to the /mnt/ids directory.

$ sudo ec2-bundle-vol -d /mnt/amis -k /mnt/ids/pk-*.pem -c /mnt/ids/cert-*.pem -u 123456789123 -r i386

I get the following:

Copying / into the image file /mnt/amis/image...
Excluding:
     /sys/kernel/debug
     /sys/kernel/security
     /sys
     /var/log/mysql
     /var/lib/mysql
     /mnt/mysql
     /proc
     /dev/pts
     /dev
     /dev
     /media
     /mnt
     /proc
     /sys
     /etc/udev/rules.d/70-persistent-net.rules
     /etc/udev/rules.d/z25_persistent-net.rules
     /mnt/amis/image
     /mnt/img-mnt
1+0 records in
1+0 records out
1048576 bytes (1.0 MB) copied, 0.00273375 s, 384 MB/s
mkfs.ext3: option requires an argument -- 'L'
Usage: mkfs.ext3 [-c|-l filename] [-b block-size] [-f fragment-size]
     [-i bytes-per-inode] [-I inode-size] [-J journal-options]
     [-G meta group size] [-N number-of-inodes]
     [-m reserved-blocks-percentage] [-o creator-os]
     [-g blocks-per-group] [-L volume-label] [-M last-mounted-directory]
     [-O feature[,...]] [-r fs-revision] [-E extended-option[,...]]
     [-T fs-type] [-U UUID] [-jnqvFKSV] device [blocks-count]
ERROR: execution failed: "mkfs.ext3 -F /mnt/amis/image -U 2c567c84-20a1-44b9-a353-dbdcc7ae863b -L "

Here is the output of df -h, which shows I’ve got plenty of room on /mnt:

Filesystem  Size  Used  Avail  Use%  Mounted on
/dev/sda1   9.9G  2.1G  7.4G   22%   /
devtmpfs    834M  124K  834M   1%    /dev
none        851M  0     851M   0%    /dev/shm
none        851M  96K   851M   1%    /var/run
none        851M  0     851M   0%    /var/lock
none        851M  0     851M   0%    /lib/init/rw
/dev/sda2   147G  352M  139G   1%    /mnt
/dev/sdf    1014M 237M  778M   24%   /vol

Does anybody have any clue what the mkfs.ext3 errors are about? I’ve posted this on the Amazon EC2 discussion forum and in the Pantheon Drupal group with no response, and I have scoured Google without finding anything like this.
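Looking at the ERROR line, the mkfs.ext3 command ends with -L and no value, so ec2-bundle-vol seems to have passed an empty volume label, which matches the “option requires an argument -- 'L'” complaint. One plausible reading (an assumption, not a confirmed cause) is that the tool copies the label of the root filesystem, so one thing worth checking is whether /dev/sda1 has a label at all. The sketch below wraps e2label for that; the function name is hypothetical, and E2LABEL can be overridden so the logic can be tested without a real device.

```bash
#!/usr/bin/env bash
# Sketch: report whether an ext2/3/4 filesystem has a volume label.
# ASSUMPTION: the empty "-L" in the failing mkfs.ext3 command comes from an
# unlabeled root filesystem; this only checks that hypothesis.

E2LABEL="${E2LABEL:-e2label}"   # overridable for testing

check_fs_label() {
  local dev="${1:-/dev/sda1}" label
  label="$("$E2LABEL" "$dev" 2>/dev/null)"
  if [ -z "$label" ]; then
    echo "$dev: no volume label"
    return 1
  fi
  echo "$dev: label '$label'"
}
```

Run it as root. If it reports no label, setting one with e2label (which takes the new label as a second argument) before re-running ec2-bundle-vol might be worth a try; this is untested here.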

Simplifying SSH command to access Amazon EC2 server

If you are like me and have to press the up arrow in your terminal window every time you need to recall the exact command for accessing your Amazon EC2 server, here is a time-saving SSH configuration trick for you.

example:

$ cd ~/.ec2
$ ssh -i davidskey.pem ubuntu@ec2-69-37-131-80.eu-west-1.compute.amazonaws.com

You can create a config file in your ~/.ssh directory to include your identity file for individual host names.

If your Amazon server has a public DNS entry which points to the long Amazon public DNS name, you can make this even easier.

$ cd ~/.ssh
$ ls -al
(If you don't find a file called "config", you can create one with the following commands.)
$ touch config
$ nano config

Add these lines (assuming that dev.davidsdomain.com is a CNAME DNS entry that points to ec2-69-37-131-80.eu-west-1.compute.amazonaws.com):

Host dev.davidsdomain.com
    IdentityFile ~/.ec2/davidskey.pem

Host ec2-69-37-131-80.eu-west-1.compute.amazonaws.com
    IdentityFile ~/.ec2/davidskey.pem

Now you can SSH to both domain names using only the following. Also note that you do not have to change to the ~/.ec2/ directory to issue the SSH command anymore.

$ ssh ubuntu@dev.davidsdomain.com
$ ssh ubuntu@ec2-69-37-131-80.eu-west-1.compute.amazonaws.com
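As an alternative to the two separate entries, one Host line can list both names, and a User line saves typing ubuntu@ each time. A minimal sketch that appends such an entry and tightens the file permissions; the function name is hypothetical, and the host names and key path are the ones from this post, so substitute your own.

```bash
#!/usr/bin/env bash
# Sketch: append one ssh_config entry covering both host names, with the login user preset.
# Host names and key path are this post's examples; replace them with your own.

add_ec2_ssh_host() {
  local dir="$HOME/.ssh" cfg="$HOME/.ssh/config"
  local host_line="Host dev.davidsdomain.com ec2-69-37-131-80.eu-west-1.compute.amazonaws.com"
  mkdir -p "$dir" && chmod 700 "$dir"
  # Do nothing if this exact Host line is already present, so re-running is harmless.
  if [ -f "$cfg" ] && grep -qxF "$host_line" "$cfg"; then
    return 0
  fi
  printf '\n%s\n    User ubuntu\n    IdentityFile ~/.ec2/davidskey.pem\n' "$host_line" >> "$cfg"
  # ssh rejects a config file that other users can write to.
  chmod 600 "$cfg"
}
```

After running add_ec2_ssh_host once, ssh dev.davidsdomain.com is enough on its own.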

One final note: this works for any host that you access over SSH with a key file. It is, of course, not limited to Amazon EC2 servers.

Customizations for a Trac & Git install

I spent 2 days setting up my Trac server the way I wanted it. Here’s what I did.

These instructions will help you get going faster than I did. There are a few things that need to be tweaked to get it working, and a couple of modules that will make administration easier.

My setup has the following requirements:

No anonymous/public access, SSL traffic, accountmanager plugin to add and configure users through the trac webadmin interface, and gitplugin for use with a Git repository.

These instructions also assume that you are using the Trac appliance AMI from TurnKey Linux at Amazon Web Services, which is built on Ubuntu 10.04.1 JeOS.

Change the hostname through Webmin to the fully qualified name that you want to use.

Confirm that both /var/spool/postfix/etc/hosts and /etc/hosts use the hostname.

/var/spool/postfix/etc/hosts didn’t seem to get updated properly and was using “trac” as hostname.
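A quick way to confirm the hostname landed in both files is to grep for the fully qualified name. A minimal sketch; the function name is hypothetical. On Ubuntu, the Postfix init script typically refreshes the chroot copy from /etc/hosts when Postfix starts, so a restart may be all a stale copy needs.

```bash
#!/usr/bin/env bash
# Sketch: confirm a fully qualified hostname appears in each given hosts file.

check_hosts_files() {
  local fqdn="$1"; shift
  local f missing=0
  for f in "$@"; do
    # -F: treat the dots literally; -w: don't match inside a longer word.
    if grep -qwF -- "$fqdn" "$f"; then
      echo "ok: $f"
    else
      echo "missing: $f"
      missing=1
    fi
  done
  return "$missing"
}
```

For example: check_hosts_files "$(hostname -f)" /etc/hosts /var/spool/postfix/etc/hosts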

POSTFIX CHANGES

General Options:

Set postfix to use hostname

Internet Hostname of this mail system: Default (provided by this system)

The appliance is configured for “localhost”, which causes an SMTP 550 invalid HELO rejection.
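The Webmin setting appears to correspond to Postfix’s myhostname parameter, and Postfix’s HELO name (smtp_helo_name) defaults to $myhostname, which is why “localhost” gets rejected. A minimal sketch to check the value from the shell; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: warn if Postfix would still announce "localhost" in its HELO/EHLO.
# postconf -h prints a parameter's value without the "name = " prefix.

check_postfix_hostname() {
  local name
  name="$(postconf -h myhostname)"
  case "$name" in
    localhost|localhost.*)
      echo "myhostname is '$name': remote servers may reject the HELO"
      return 1
      ;;
    *)
      echo "myhostname is '$name'"
      ;;
  esac
}
```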

TRAC PLUGINS:

Installed AccountManagerPlugin

$ easy_install http://trac-hacks.org/svn/accountmanagerplugin/0.11

sub-plugins (assuming the use of htpasswd file for user store)

AccountManagerAdminPage

AccountManager

HTPasswdStore

AccountChangeListener

AccountChangeNotificationAdminPanel

AccountModule

LoginModule

Configure Accounts:

Use htpasswd as the password store; the file is located at /etc/trac/htpasswd.

$ chgrp www-data /etc/trac
$ chown www-data /etc/trac
$ chgrp www-data /etc/trac/htpasswd
$ chown www-data /etc/trac/htpasswd

In Trac webadmin interface -> Admin/Accounts/Configuration

HTPASSWDSTORE = true (1) filename /etc/trac/htpasswd

DISABLE Trac’s built-in login so you can use the form-based login.

$ nano /var/local/lib/trac/git-/conf/trac.ini

and make sure the following line is in the components section

[components]
trac.web.auth.loginmodule = disabled

Comment out the authentication requirement in Trac’s Apache (HTTPD) configuration so that Apache does not handle authentication and pop up an HTTP login dialog.

$ nano /etc/trac/apache.conf

Comment out the “Require valid-user” line as in the example below.

AuthType Basic
AuthName "Trac"
AuthUserFile /etc/trac/htpasswd
# Require valid-user
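The same edit can be made from the shell. A minimal sketch using sed that comments out only uncommented Require valid-user lines and keeps a .bak copy of the original file; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: comment out "Require valid-user" in an Apache config file, keeping a .bak copy.

comment_out_require() {
  local conf="${1:-/etc/trac/apache.conf}"
  # Match only lines that start with optional whitespace and then "Require",
  # so already-commented lines are left alone; \1 preserves the indentation.
  sed -i.bak 's/^\([[:space:]]*\)Require valid-user/\1# Require valid-user/' "$conf"
}
```

Restart Apache afterwards so the change takes effect.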

Installed NoAnonymousAccess Trac Plugin

$ easy_install http://trac-hacks.org/svn/noanonymousplugin/0.11

Installed IniAdminPlugin Trac Plugin

$ easy_install http://trac-hacks.org/svn/iniadminplugin/0.11

Configure Notifications in Trac:

In Trac webadmin interface -> Admin/trac.ini notification tab (or do through the actual trac.ini file)

Set always notify owner: True

Set smtp_from_name (username@host.domain.tld, which you set in a previous step in Webmin)

Set smtp_from (username@host.domain.tld, which you set in a previous step in Webmin)

Configure Apache2 to redirect all traffic from HTTP to SSL (HTTPS)

$ nano /etc/trac/apache.conf

Edit the virtual host for port 80 so that it looks something like:

ServerName hostname.domain.tld
Redirect / https://hostname.domain.tld/
# UseCanonicalName Off
# ServerAdmin webmaster@localhost

Here, hostname.domain.tld is the hostname you set in the sections above. All browser requests to HTTP will now be redirected to HTTPS automatically.
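To confirm the redirect from the command line, you can request only the headers of the HTTP page and check the Location header. A minimal sketch using curl; hostname.domain.tld stands for your own hostname, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: verify that plain HTTP answers with a redirect to HTTPS.

check_https_redirect() {
  local host="$1" location
  # -s: quiet, -I: HEAD request (headers only); headers end in CRLF, so strip \r.
  location="$(curl -sI "http://$host/" | tr -d '\r' |
    awk 'tolower($1) == "location:" { print $2 }')"
  case "$location" in
    https://*) echo "redirects to $location" ;;
    *) echo "no HTTPS redirect (Location: '$location')"; return 1 ;;
  esac
}
```

For example: check_https_redirect hostname.domain.tld. A bare Redirect answers with a 302 status, so the check looks only at the Location header rather than the status code.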

You must restart both postfix and apache after making most of the changes above, so if something doesn’t work, try restarting the services. You should do this at the very end anyway.

$ service apache2 restart
$ service postfix restart

One final note is that you’ll want to set the user permissions in Trac to whatever you prefer. I removed all permissions from anonymous and added all permissions to authenticated users, with a few exceptions.

$ trac-admin /var/local/lib/trac/git- permission remove anonymous '*'

The command above is the easy way to remove all permissions from anonymous.

There is no equally easy way to add all the permissions to authenticated users, however, so that part is a bit of a time consumer.
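One way to cut down the repetition: trac-admin’s permission add command accepts several actions at once, so a single call can grant a whole list to authenticated. A minimal sketch; the function name is hypothetical, the action list is only an example of standard Trac actions (remove the ones you want to withhold), and because the environment path above is truncated, you need to supply your own.

```bash
#!/usr/bin/env bash
# Sketch: grant a list of Trac actions to "authenticated" in one trac-admin call.
# ASSUMPTIONS: the first argument is the path to your Trac environment;
# ACTIONS is an example list, so trim it to the permissions you want to grant.

grant_authenticated() {
  local env="$1"; shift
  # "permission add" takes a user followed by one or more actions.
  trac-admin "$env" permission add authenticated "$@"
}

ACTIONS=(
  BROWSER_VIEW CHANGESET_VIEW FILE_VIEW LOG_VIEW
  MILESTONE_VIEW REPORT_VIEW ROADMAP_VIEW SEARCH_VIEW TIMELINE_VIEW
  TICKET_VIEW TICKET_CREATE TICKET_MODIFY
  WIKI_VIEW WIKI_CREATE WIKI_MODIFY
)
```

For example: grant_authenticated /path/to/your/trac/env "${ACTIONS[@]}". Running trac-admin against your environment with permission list shows every action your installation defines.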

Hope that helps!

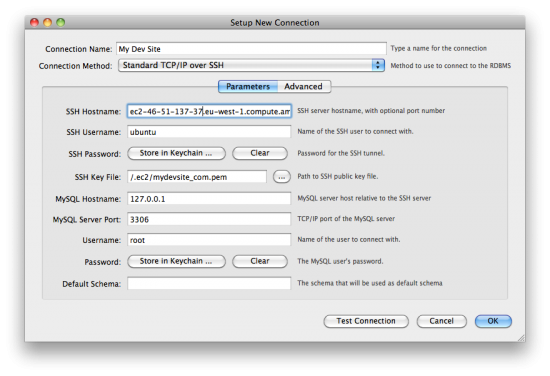

How to create a MySQL Workbench connection to Amazon EC2 server

I’ve been working with Amazon Web Services (AWS) Elastic Compute Cloud (EC2) quite a bit recently.

I’ve also started working with Ubuntu at the same time. The result is the ability to quickly spin up new instances of Ubuntu Server for various purposes without the hassle of new hardware, or even much configuration headache. Using Amazon Machine Images (AMIs), an administrator can spin up an instance that is already configured, patched, and ready to run.

Because this technology is new to me, there has been a bit of a learning curve. One thing that caught me off guard was the need to use key pairs instead of a username and password to access the servers; key pairs are more secure and are the default method for accessing AWS EC2 servers.

I easily figured out how to access an AWS EC2 server with a key pair from the SSH command line and from FileZilla, but MySQL Workbench seemed a little more complex.

Above you can see a screenshot of my new connection dialog.
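For comparison, the same key pair can be used to forward MySQL’s port over a plain SSH tunnel, which is essentially what Workbench’s “Standard TCP/IP over SSH” connection method does for you. A minimal sketch, assuming MySQL listens on 127.0.0.1:3306 on the server and local port 3307 is free; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: forward a local port to MySQL on the EC2 server over SSH, using the key pair.
# ASSUMPTIONS: MySQL listens on 127.0.0.1:3306 on the server; the local port is free.

open_mysql_tunnel() {
  local key="$1" userhost="$2" lport="${3:-3307}"
  # -N: run no remote command; -L: local port -> the server's 127.0.0.1:3306.
  local cmd=(ssh -i "$key" -N -L "${lport}:127.0.0.1:3306" "$userhost")
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

With the tunnel open, a “Standard (TCP/IP)” Workbench connection to 127.0.0.1 port 3307 reaches the server’s MySQL.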
